Full Automation By Ansible

Agents are a common way for IaaS software to manage devices; for example, ZStack uses Python agents to manage KVM hosts. With a massive number of devices, installing and upgrading agents becomes a huge challenge, so most IaaS software leaves this problem to customers or distribution vendors, which leads to fragile solutions that lack support from the IaaS software itself. ZStack took this problem into account from the very beginning, tried Puppet, Salt, and Ansible in succession, and finally integrated with Ansible seamlessly and transparently. All ZStack agents are automatically deployed, configured, and upgraded by Ansible; users may not even notice their existence.

The Motivation

IaaS software is normally a composite of many small programs. Ideally, IaaS software could be written as central management software that talks to devices through the devices' SDKs; in reality, devices provide either no SDK or an incomplete one, forcing IaaS software to deploy a small program called an agent to control them. Even though ZStack packages all services in a single process (see The In-Process Microservices Architecture), a few agents still need to be deployed to separate devices to control them. The process of deploying agents, which not only installs agents and dependent software but also configures the target devices, is not straightforward and usually requires users to do a lot of manual work. This problem becomes prominent when the IaaS software manages a very large number of devices, and it can even restrict the scale of the data center.

The Problem

Deploying and upgrading agents and configuring target devices is the configuration management problem that tools like Puppet, Chef, Salt, and Ansible aim to solve. Many developers have started using those tools to wrap IaaS software in an easy-to-deploy way; for example, to install OpenStack, you will likely look for a Puppet module instead of manually following every step in its documentation. Those third-party wrappers relieve the problem somewhat, but they are also fragile: any change in the wrapped software may break them. Moreover, when users want to configure a certain part of the wrapped software, they may have to dive into those third-party wrappers to make an ad-hoc change. Finally, the third-party wrappers cannot handle upgrading the wrapped software, which sends users back to the daunting details the wrappers try to hide.

Through seamless and transparent integration with the configuration management software Ansible, ZStack solves this problem by hiding the details from users and taking responsibility for managing agents itself. Users get software that can simply be downloaded and run (or upgraded), which fulfills the goal of automating everything in the data center and helps administrators overcome the fear of installing, configuring, and upgrading their clouds.

Integration with Ansible

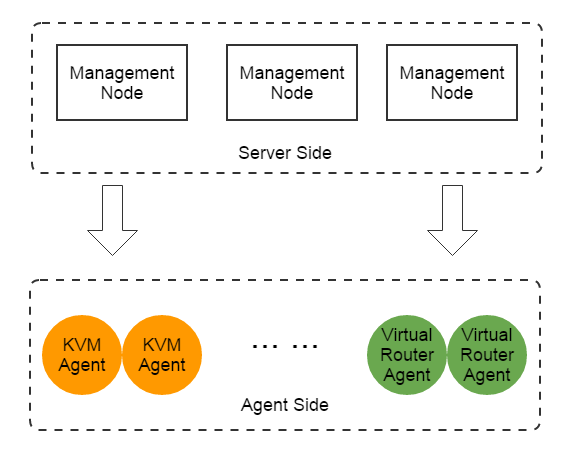

ZStack has a typical server-agent architecture in which the server side contains all orchestration services driving business logic, and the agent side executes commands from the orchestration services through small Python agents (e.g. KVM agents, virtual router VM agents) running on separate devices.

Services and agents: ZStack has clear definitions for services and agents. A service is responsible for driving one part of the business in the cloud, for example, the storage service. A service typically runs in the process of the ZStack management node, has its own APIs and configurations, and cooperates with other services to run the business of the cloud. An agent is a small minion program ordered by a service to manipulate an external device that doesn't provide a decent SDK; for example, the SFTP backup storage agent builds a backup storage on a Linux machine using the SFTP protocol. The design principle of services and agents is to put all complex business logic in services and make agents as dead simple as possible. We hope this explanation clarifies what we call services and agents in ZStack, because other IaaS software may define them differently; we have seen some IaaS software handle business logic in agent code.

Every ZStack agent consists of three files: a Python package (xxx.tar.gz), an init.d service file, and an Ansible YAML configuration. Each agent's files are located in its own directory under $web_container_root/webapps/zstack/WEB-INF/classes/ansible, so a service can find its agent through the Java classpath. The Ansible YAML configuration wires everything together; it describes how to install the agent, what the agent depends on, and how to configure the target device. Taking KVM as an example, a part of its Ansible YAML configuration looks like:

    # Install KVM dependencies with the package manager of the detected OS family
    - name: install kvm related packages on RedHat based OS
      when: ansible_os_family == 'RedHat'
      yum: name="{{item}}"
      with_items:
        - qemu-kvm
        - bridge-utils
        - wget
        - qemu-img
        - libvirt-python
        - libvirt
        - nfs-utils
        - vconfig
        - libvirt-client
        - net-tools

    - name: install kvm related packages on Debian based OS
      when: ansible_os_family == 'Debian'
      apt: pkg="{{item}}"
      with_items:
        - qemu-kvm
        - bridge-utils
        - wget
        - qemu-utils
        - python-libvirt
        - libvirt-bin
        - vlan
        - nfs-common

    # Firewall setup: stop firewalld and fall back to plain iptables rules
    - name: disable firewalld in RHEL7 and CentOS7
      when: ansible_os_family == 'RedHat' and ansible_distribution_version >= '7'
      service: name=firewalld state=stopped enabled=no

    - name: copy iptables initial rules in RedHat
      copy: src="/iptables" dest=/etc/sysconfig/iptables
      when: ansible_os_family == "RedHat" and is_init == 'true'

    - name: restart iptables
      service: name=iptables state=restarted enabled=yes
      when: chroot_env == 'false' and ansible_os_family == 'RedHat' and is_init == 'true'

    # The trailing "|| true" keeps the task from failing when virbr0 does not exist
    - name: remove libvirt default bridge
      shell: "(ifconfig virbr0 &> /dev/null && virsh net-destroy default > /dev/null && virsh net-undefine default > /dev/null) || true"

As shown above, the YAML configuration takes care of all settings for a KVM host. You don't need to worry that ZStack will ask you to install a lot of dependent software manually, and you will not suffer weird errors caused by missing dependencies or misconfiguration. It is ZStack's responsibility to make everything work on your Linux machines, whether they run Ubuntu or CentOS; even if you only have a minimal installation, ZStack knows how to get them ready.

Services in Java code can use an AnsibleRunner to call Ansible to deploy or upgrade agents at an opportune time. The AnsibleRunner of KVM looks like:

    AnsibleRunner runner = new AnsibleRunner();
    runner.installChecker(checker);
    // Connection details of the target KVM host
    runner.setAgentPort(KVMGlobalProperty.AGENT_PORT);
    runner.setTargetIp(getSelf().getManagementIp());
    runner.setPlayBookName(KVMConstant.ANSIBLE_PLAYBOOK_NAME);
    runner.setUsername(getSelf().getUsername());
    runner.setPassword(getSelf().getPassword());
    // A newly added host gets a full deployment with initial setup
    if (info.isNewAdded()) {
        runner.putArgument("init", "true");
        runner.setFullDeploy(true);
    }
    // Arguments handed to the playbook run
    runner.putArgument("pkg_kvmagent", agentPackageName);
    runner.putArgument("hostname", String.format("%s.zstack.org", self.getManagementIp().replaceAll("\\.", "-")));
    // Continue or abort the surrounding flow depending on the result
    runner.run(new Completion(trigger) {
        @Override
        public void success() {
            trigger.next();
        }

        @Override
        public void fail(ErrorCode errorCode) {
            trigger.fail(errorCode);
        }
    });

The AnsibleRunner is very smart. It tracks the MD5 sum of each agent file and tests the port connectivity of the agent on the remote device, ensuring that Ansible is called only when necessary. Normally, the process of deploying or upgrading an agent is transparent and happens at trigger points defined by services; for example, a KVM agent is automatically deployed when a new KVM host is added. However, services also provide APIs, called Reconnect APIs, that let users trigger an agent deployment imperatively; for example, after making the corresponding changes in the KVM YAML configuration, users can call APIReconnectHostMsg to trigger a re-deployment of the KVM agent on a host in order to apply a critical security fix for the Linux operating system. In the future, ZStack will provide a framework allowing users to execute their customized Ansible YAML configurations without modifying the default one in ZStack.
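
The "only when necessary" check described above can be illustrated with a minimal sketch. It assumes the decision depends on two inputs: whether the MD5 sum of the local agent file matches the one recorded for the remote device, and whether the agent port answers. The class and method names (AgentDeployCheck, md5Hex, isPortOpen, needsDeploy) are hypothetical and are not ZStack's actual AnsibleRunner API.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper, not part of ZStack: decides whether Ansible must run.
class AgentDeployCheck {
    /** Returns the hex-encoded MD5 digest of the given bytes. */
    static String md5Hex(byte[] data) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            StringBuilder sb = new StringBuilder();
            for (byte b : md.digest(data)) {
                sb.append(String.format("%02x", b));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 is a required algorithm on every Java platform
            throw new IllegalStateException(e);
        }
    }

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isPortOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /**
     * Assumed rule: deploy when the agent is unreachable or when the local
     * agent file differs from the one last deployed to the device.
     */
    static boolean needsDeploy(String localMd5, String deployedMd5, boolean agentReachable) {
        return !agentReachable || !localMd5.equals(deployedMd5);
    }
}
```

With a rule like this, an unchanged agent that is still reachable costs only a digest comparison and a connection attempt, while a changed package or a dead agent triggers a playbook run.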

During a software upgrade, after users install a new version of ZStack and reboot all management nodes, the AnsibleRunner detects that the MD5 sums of the agents have changed and automatically upgrades the agents on the external devices. This process is transparent and carefully designed; for example, if the host service detects 10,000 hosts in total, it upgrades 1,000 KVM hosts at a time to avoid exhausting the resources of the management nodes. Global configurations are provided so users can tune this behavior (e.g. upgrade 100 hosts at a time).
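
The batching idea can be sketched as a simple partition of the host list. This is an illustration under assumptions, not ZStack's implementation: the class BatchUpgradePlanner and its partition method are hypothetical names, and the batch size stands in for the global configuration mentioned above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of ZStack: splits hosts into upgrade batches.
class BatchUpgradePlanner {
    /**
     * Splits items into consecutive batches of at most batchSize elements.
     * The last batch may be smaller than the others.
     */
    static <T> List<List<T>> partition(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            // Copy the view so each batch is independent of the source list
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }
}
```

Partitioning 10,000 hosts with a batch size of 1,000 yields 10 batches; lowering the batch size to 100 yields 100 smaller batches, trading total upgrade time for lower load on the management nodes.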

Summary

In this article, we demonstrated ZStack's seamless and transparent integration with Ansible. In this way, the process of agent installation, configuration, and upgrade is fully automated, keeping daunting details away from users and leaving them to ZStack itself.